Fast Bird Part Localization for Fine-grained Recognition

1. Abstract *

In this work we introduce a new, faster method for localizing the parts of birds. Part localization is an important step in fine-grained recognition because the discriminative features are highly local. The state-of-the-art method of [1] uses R-CNN [2] to localize parts. R-CNN performs O(1000) forward passes of the network, which makes it very slow. Compared to the state of the art [1], our method is at least two orders of magnitude faster while achieving comparable categorization accuracy.

2. Publication

Fast Bird Part Localization for Fine-Grained Categorization

Yaser Souri, Shohreh Kasaei

The Third Workshop on Fine-Grained Visual Categorization (FGVC3) in conjunction with CVPR 2015

3. Part Localization as Pixel Classification

3.1. Classifying pixels

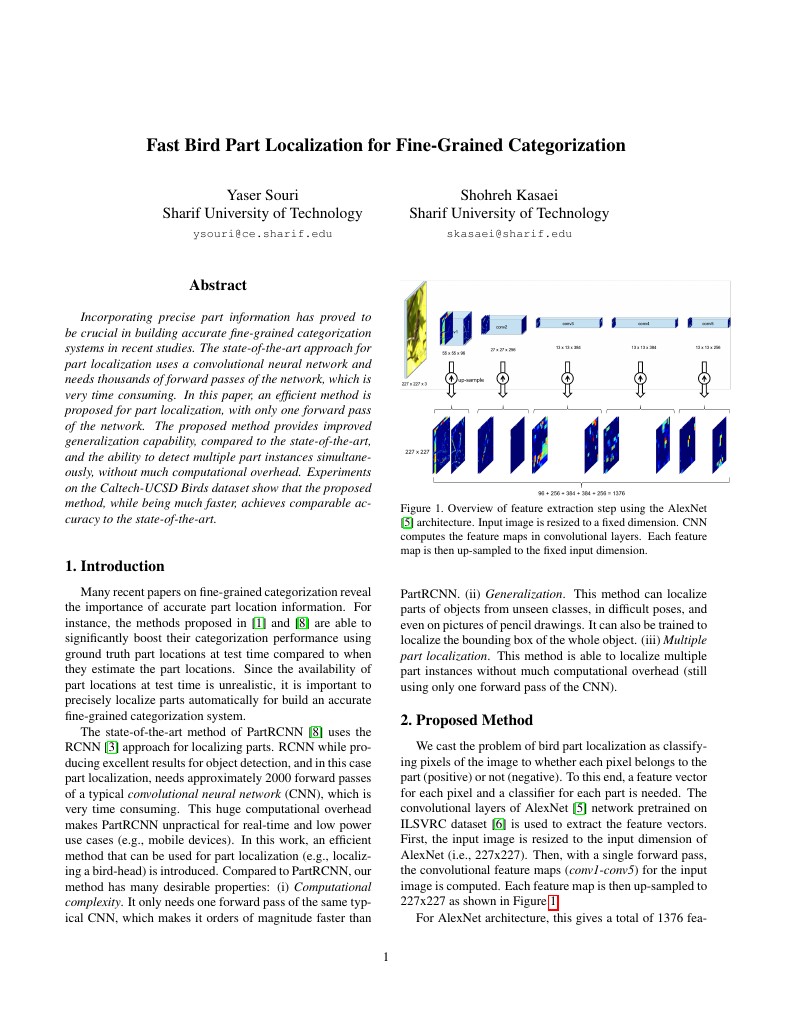

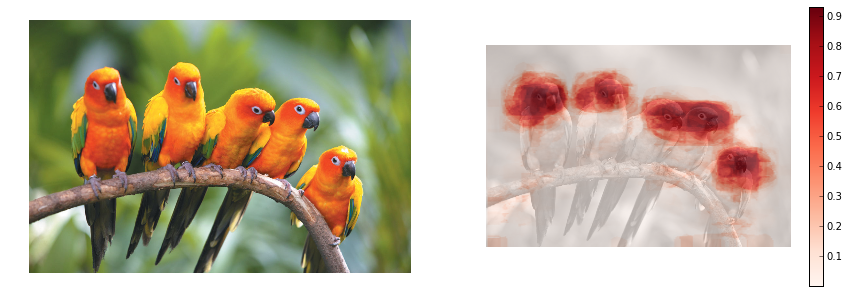

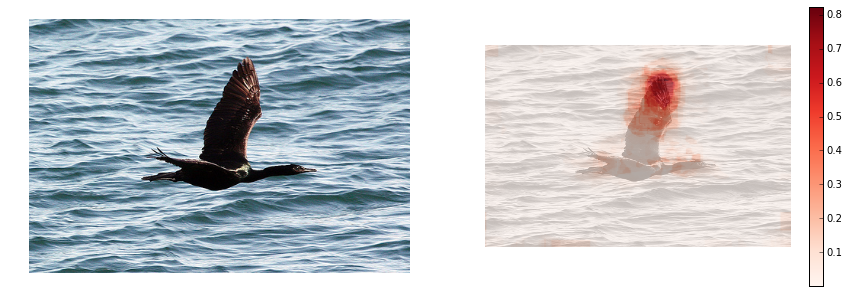

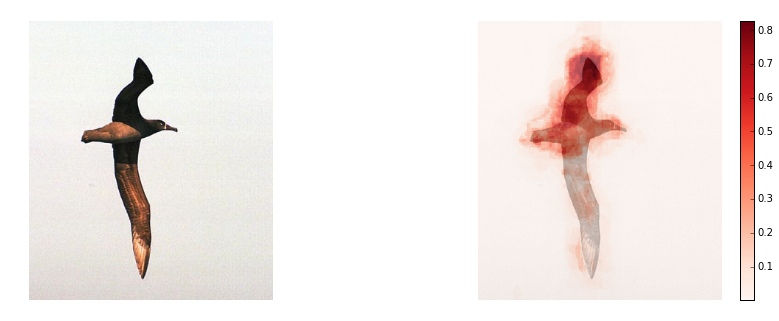

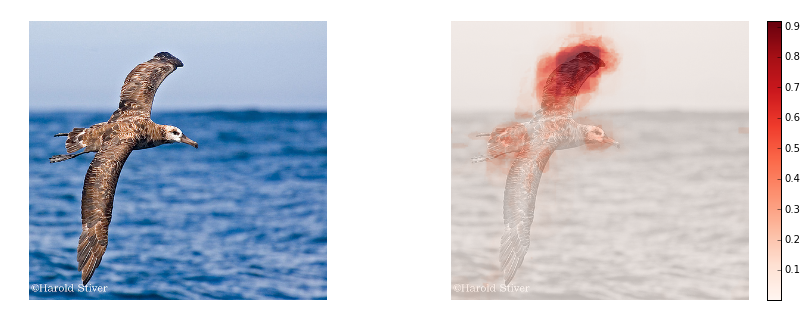

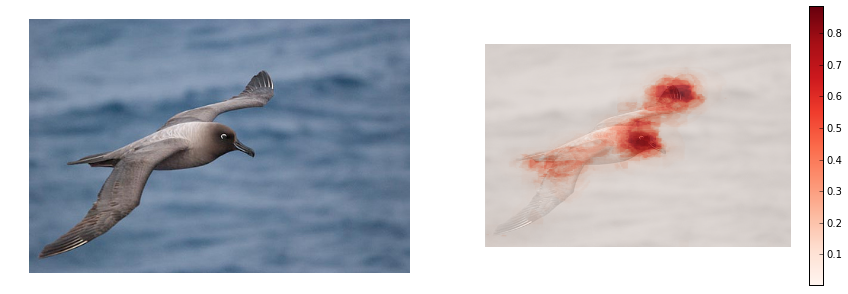

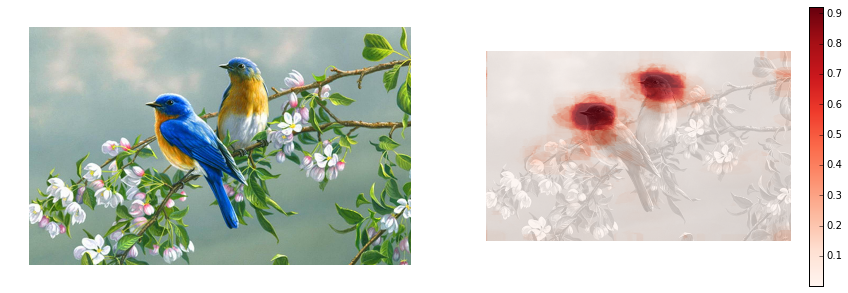

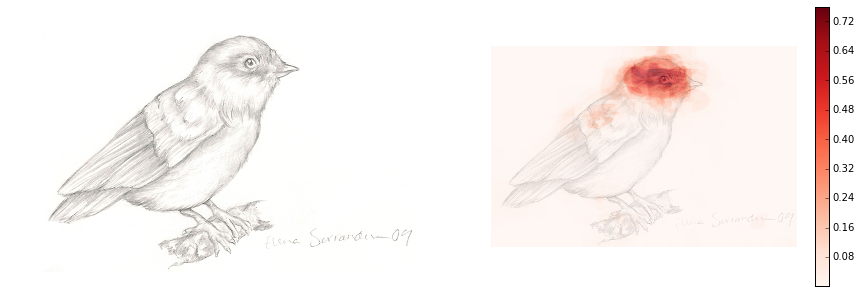

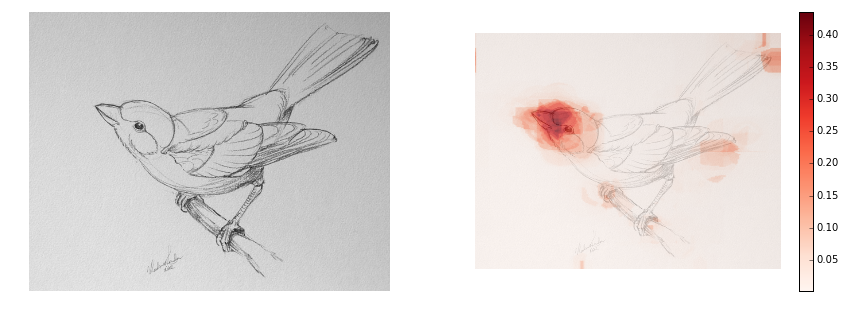

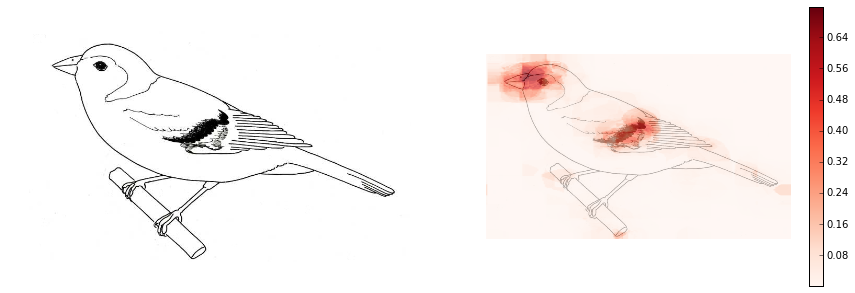

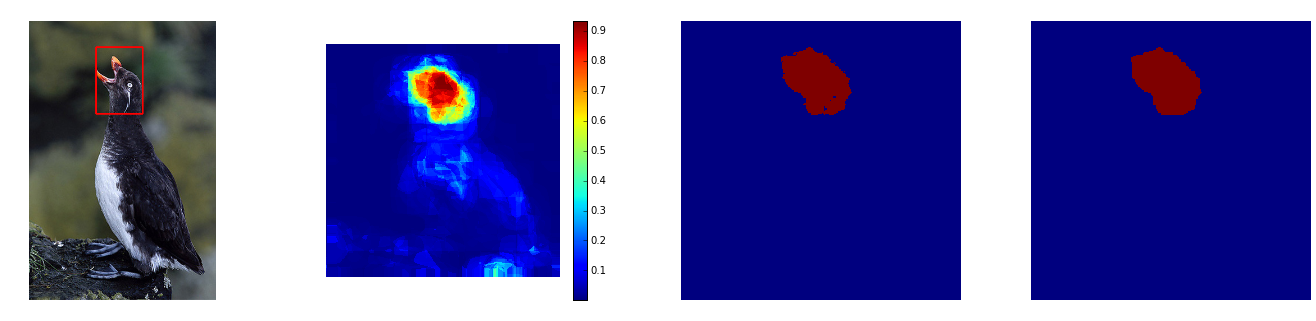

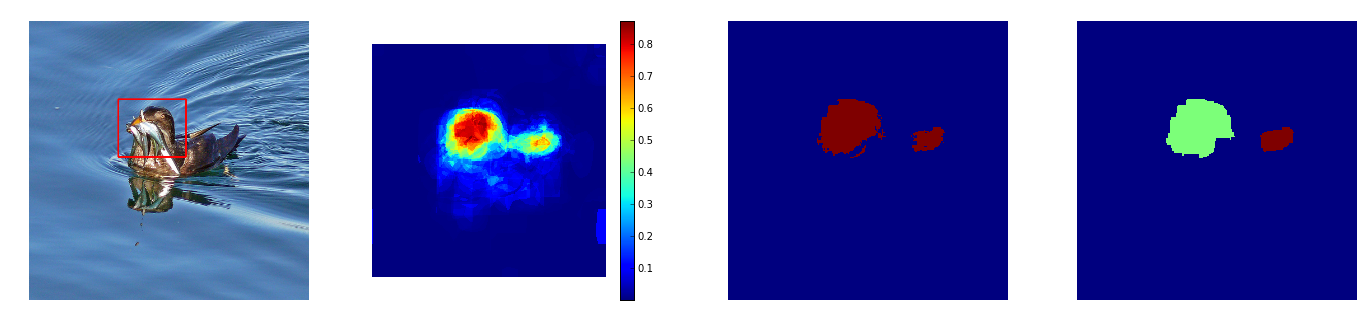

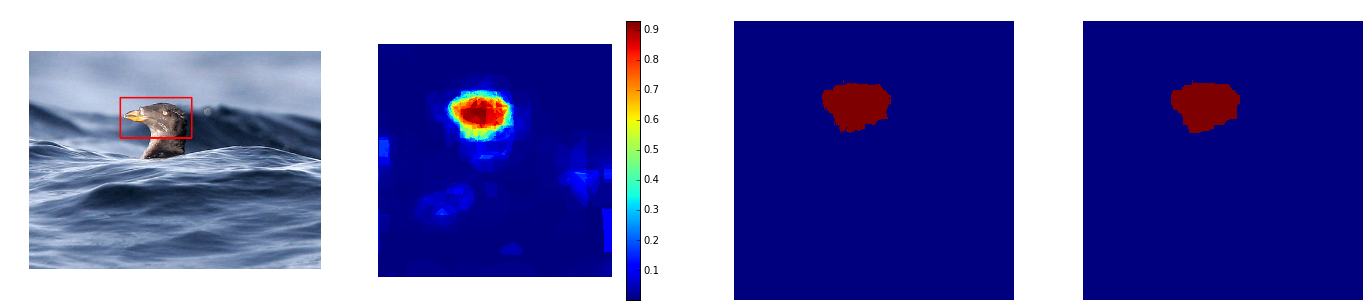

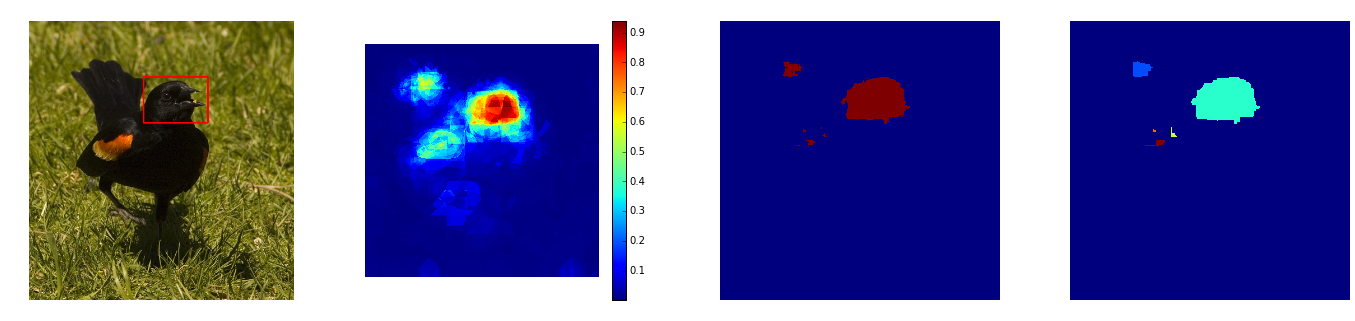

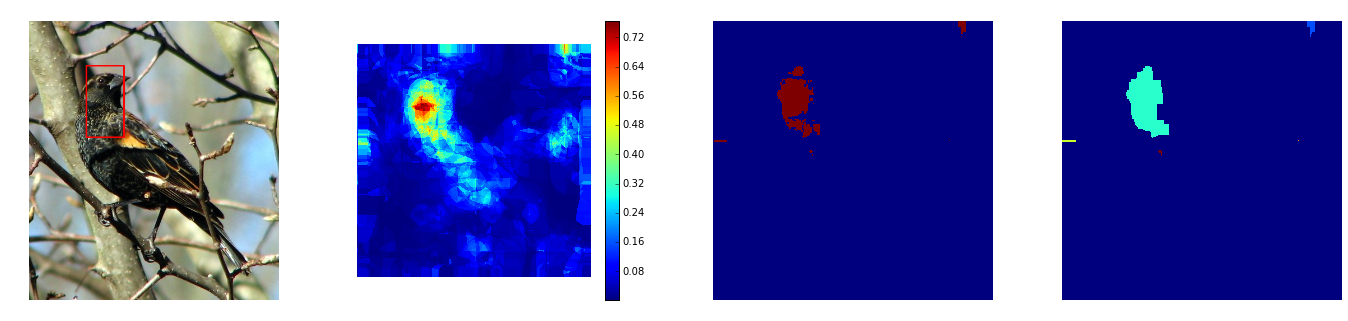

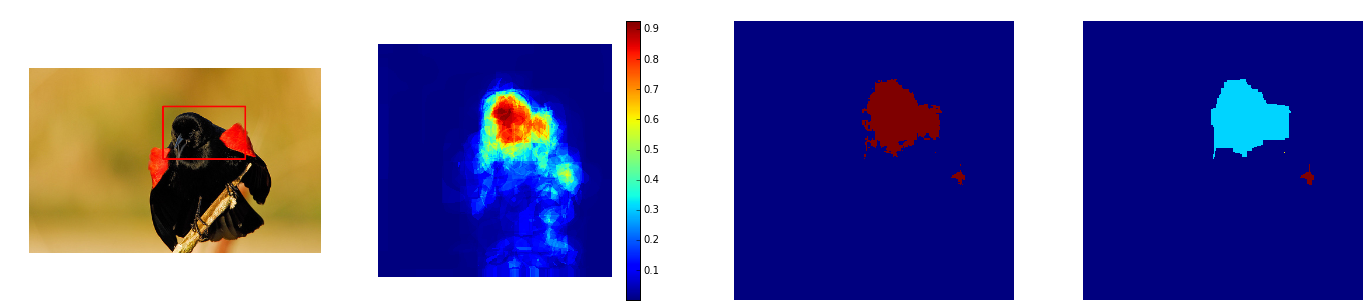

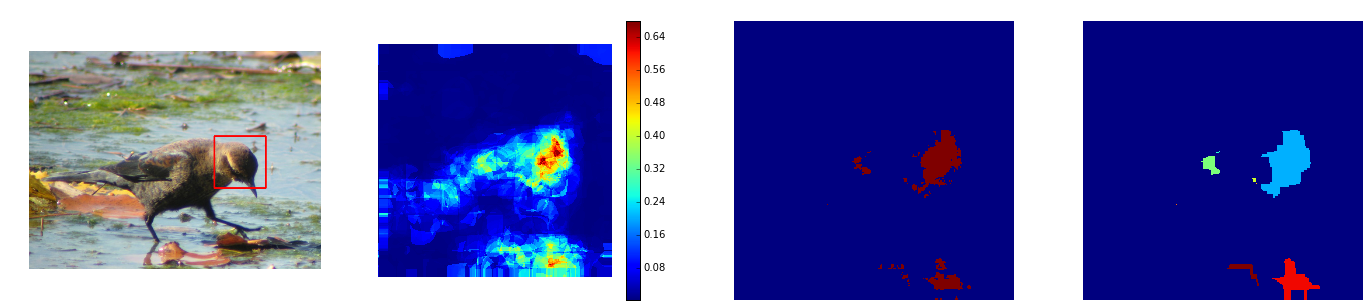

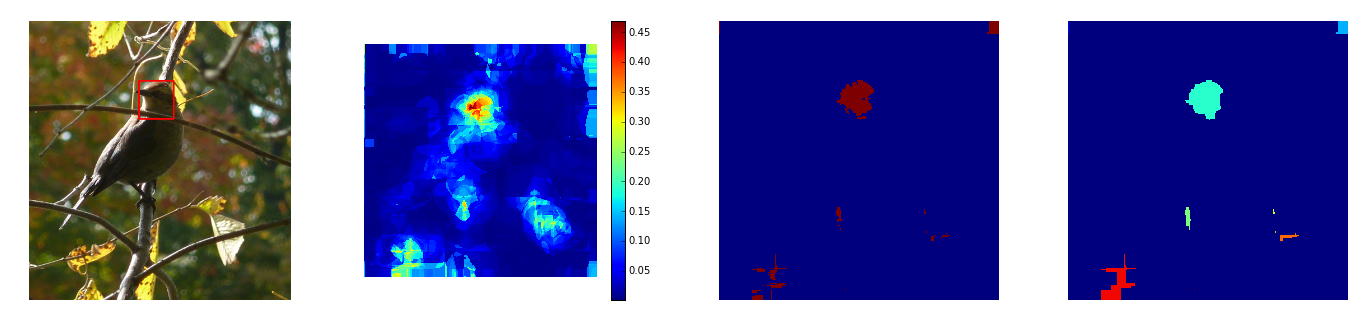

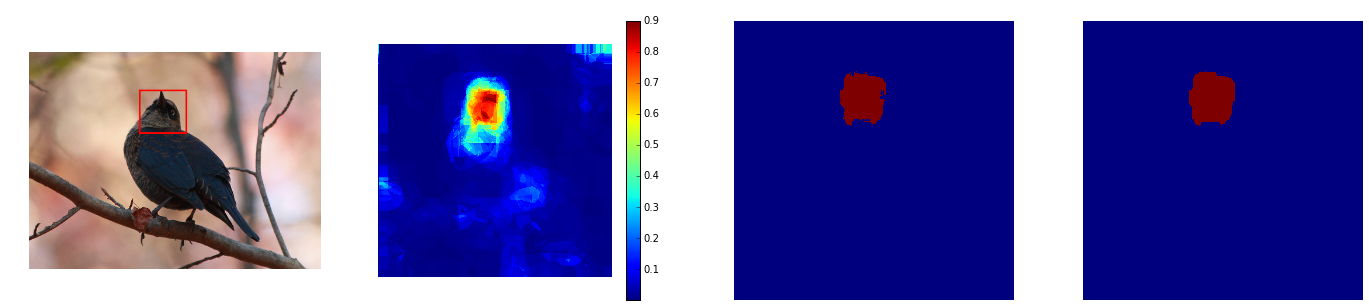

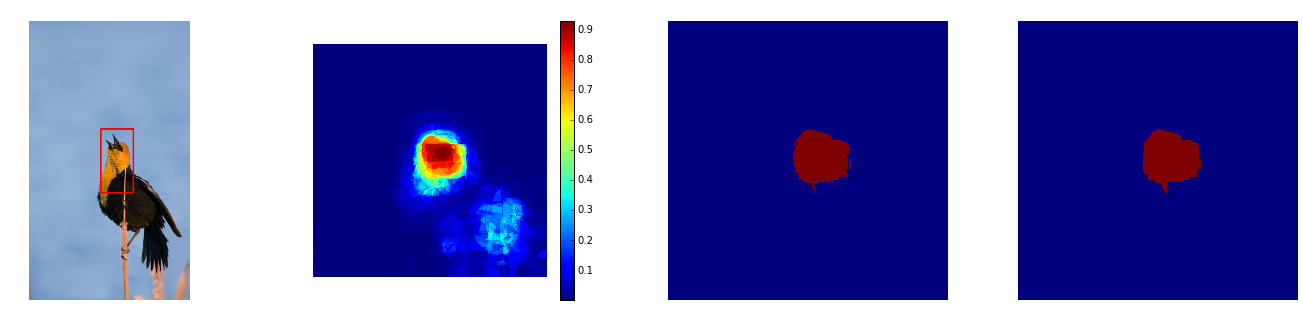

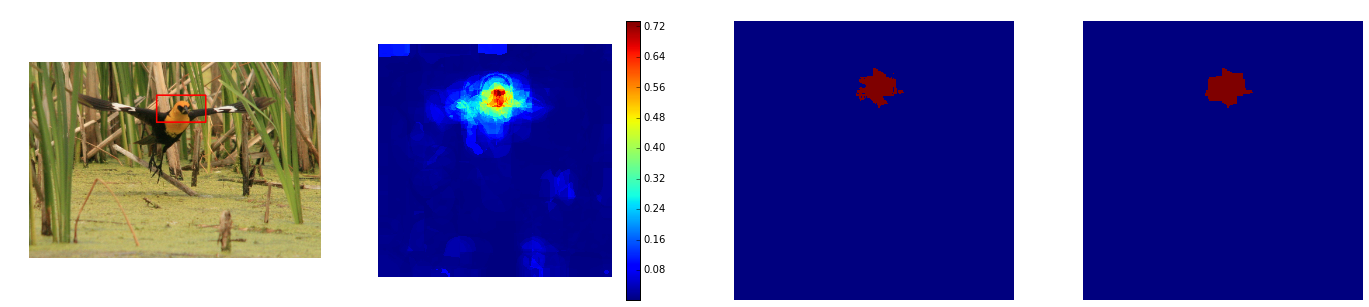

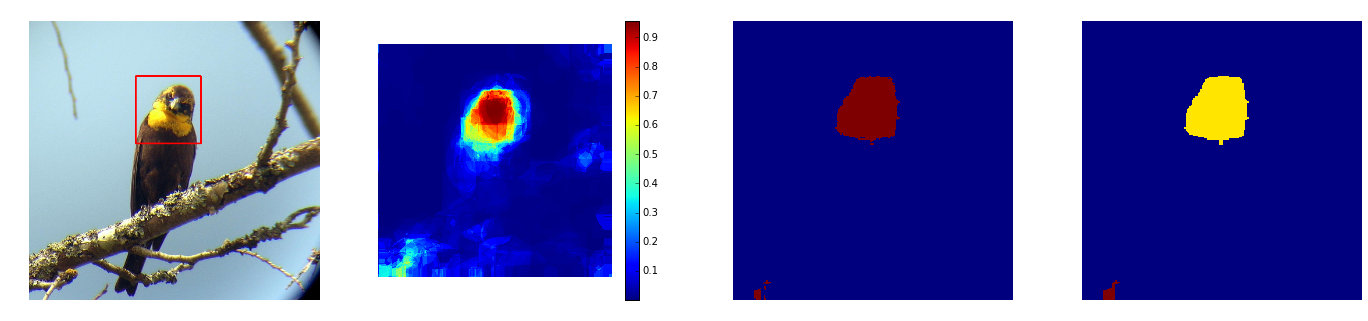

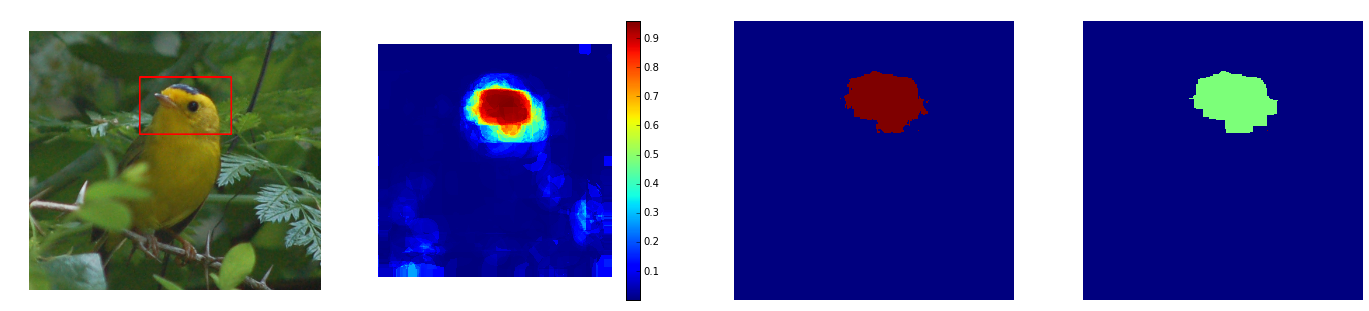

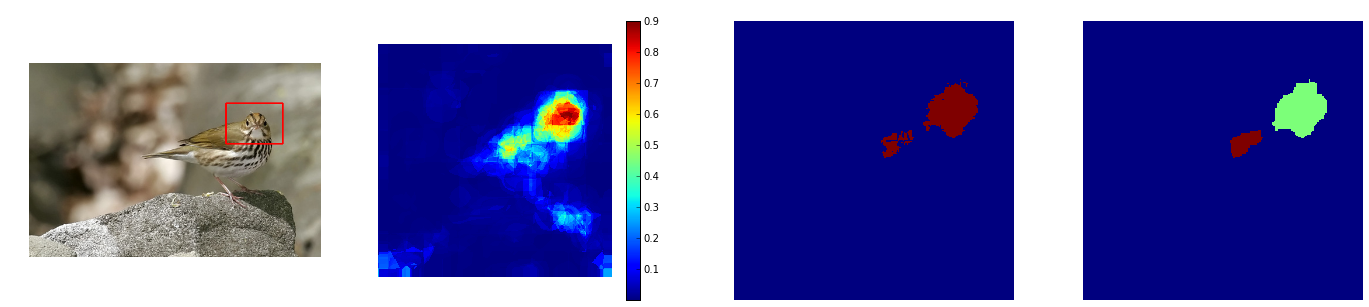

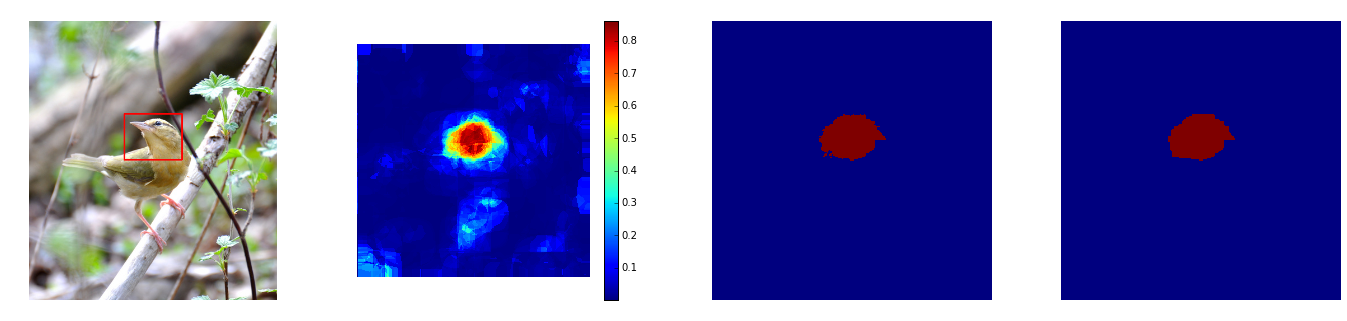

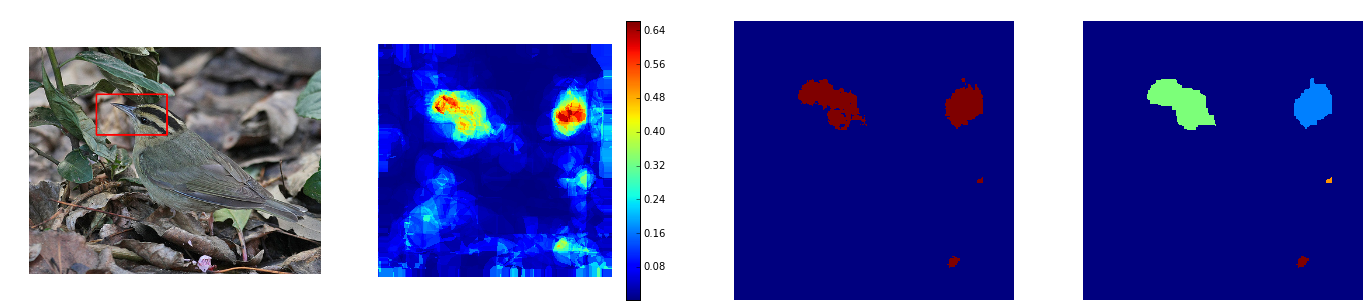

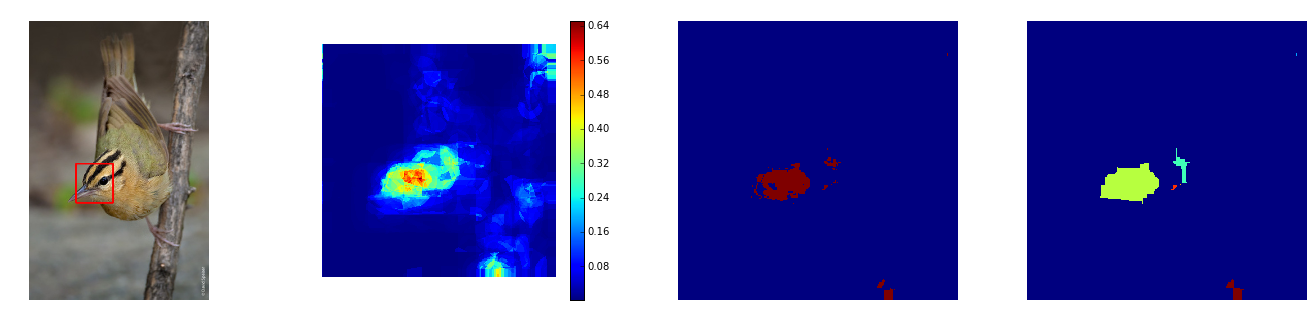

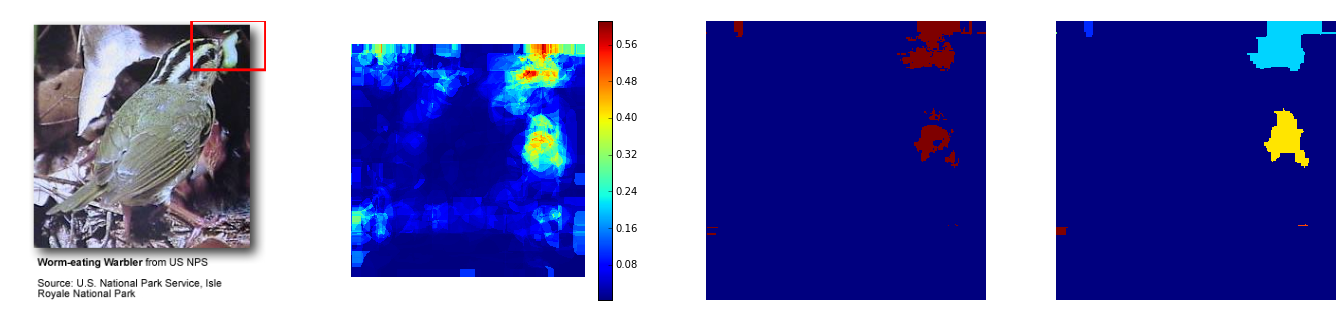

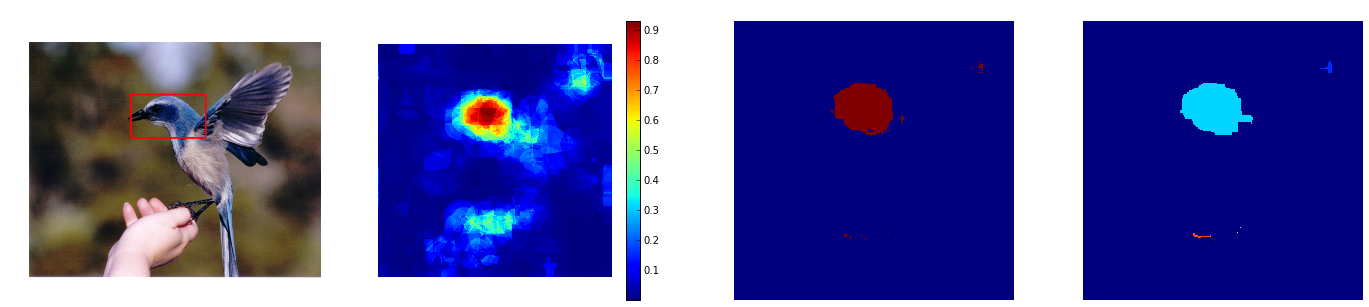

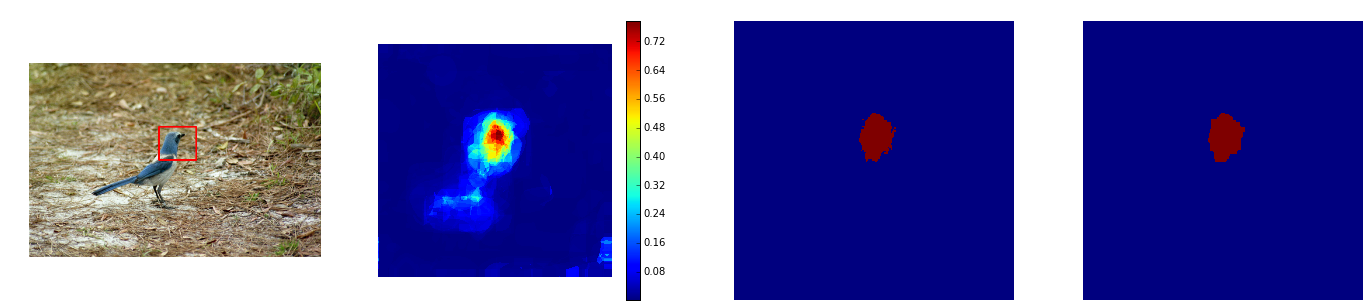

We pose the problem of finding a part as classifying the set of pixels that belong to that part. Below are example results of our system classifying whether each pixel belongs to the head of a bird. The redder a pixel is, the more confident the system is that it belongs to the head. Exact probability values can be read from the color bar.

To train each part localizer (pixel classifier) we need three things:

- feature representation for each pixel

- a set of positive and negative example pixels

- a classifier

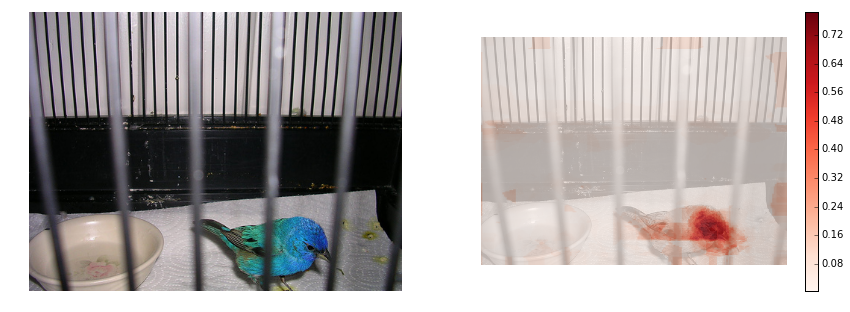

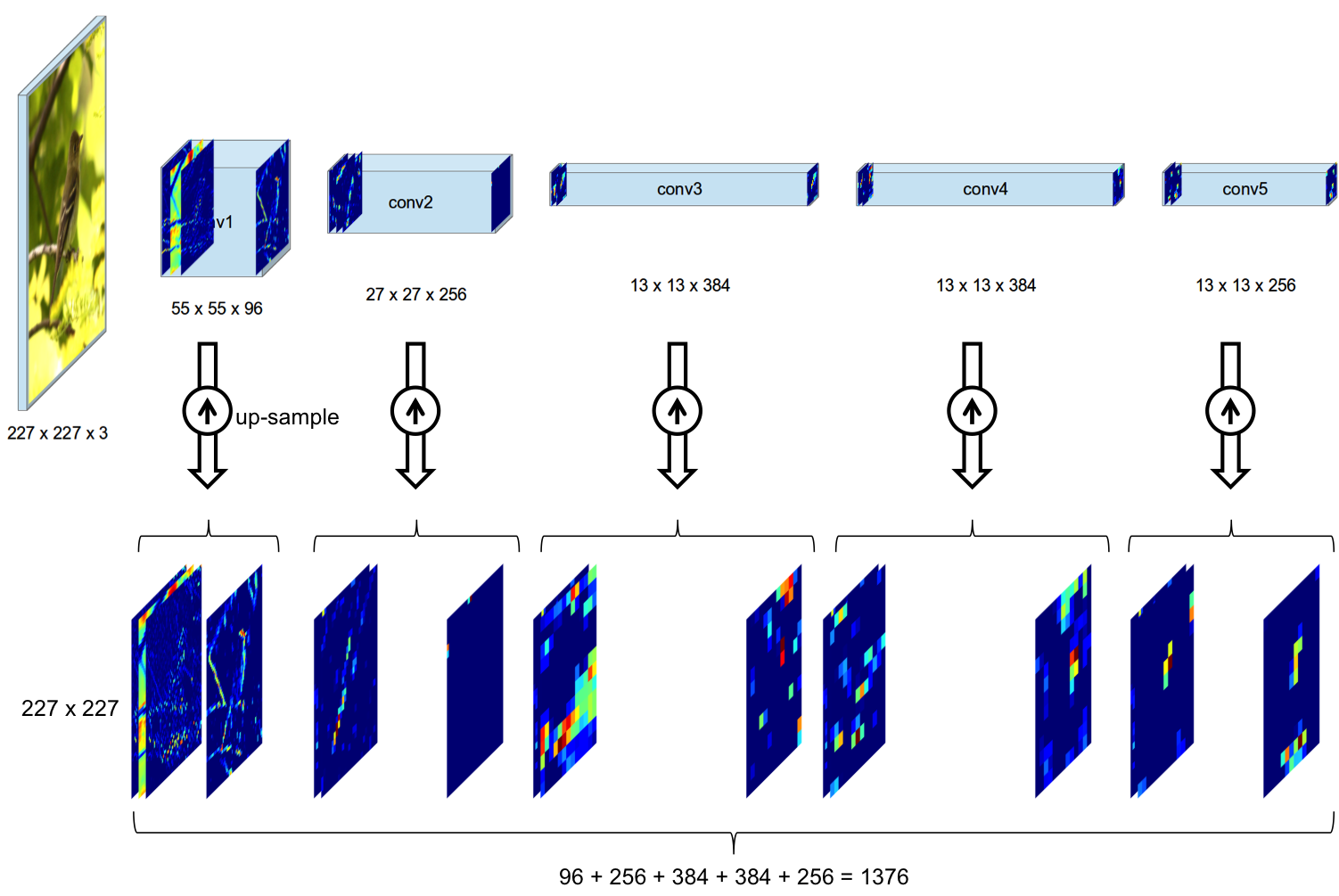

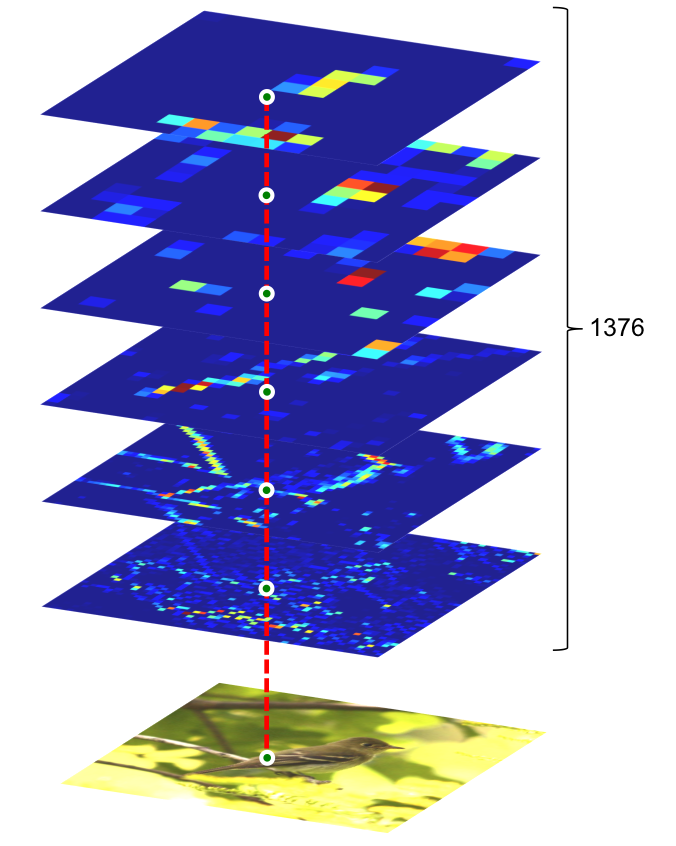

3.1.1. Feature representation for each pixel (similar to [3])

We use an off-the-shelf Convolutional Neural Network (specifically CaffeNet, a network almost identical to AlexNet) that has been pre-trained on the ILSVRC 2012 classification task, and we use only a single forward pass of its convolutional layers (conv1 - conv5). After up-sampling each feature map, we obtain a good feature representation for every pixel in the image.
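The up-sampling and stacking step can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, nearest-neighbour interpolation is an assumption (the page does not say which interpolation was used), and small arrays stand in for real conv1 - conv5 activations.

```python
import numpy as np

def upsample_nearest(fmap, out_h, out_w):
    """Nearest-neighbour up-sampling of a (C, h, w) map to (C, out_h, out_w).

    Assumption: the interpolation scheme is not stated in the source.
    """
    _, h, w = fmap.shape
    rows = (np.arange(out_h) * h) // out_h  # source row for each output row
    cols = (np.arange(out_w) * w) // out_w  # source column for each output column
    return fmap[:, rows[:, None], cols[None, :]]

def pixel_features(feature_maps, out_h, out_w):
    """Up-sample every layer's maps and stack them per pixel.

    Returns an array of shape (out_h * out_w, total_channels), one
    descriptor per pixel in row-major order.
    """
    stacked = np.concatenate(
        [upsample_nearest(f, out_h, out_w) for f in feature_maps], axis=0
    )
    return stacked.reshape(stacked.shape[0], -1).T

# Stand-in activations for two layers (hypothetical shapes, not CaffeNet's).
layer_a = np.arange(8, dtype=float).reshape(2, 2, 2)
layer_b = np.ones((3, 4, 4))
feats = pixel_features([layer_a, layer_b], out_h=4, out_w=4)
```

Each row of `feats` is then the descriptor of one pixel, which is the form a per-pixel classifier expects.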

3.1.2. Positive and negative training pixels

For each part that we want to localize, we mine a large set of positive and negative pixels from the training set of the CUB-200-2011 dataset. Note that the CUB dataset contains part location annotations, and we use them during training.
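One plausible way to mine such pixels is sketched below. The exact sampling rule is not described here, so everything in this block is an assumption: the function name, the idea of starting from a binary part mask derived from the annotations, and uniform random sampling inside and outside that mask.

```python
import numpy as np

def sample_training_pixels(part_mask, n_pos, n_neg, seed=0):
    """Sample flat pixel indices and labels from a binary part mask.

    part_mask: (H, W) boolean array, True where the pixel belongs to the
    part (hypothetical input, e.g. built from the part annotations).
    Returns (indices, labels) with label 1 for part pixels and 0 otherwise.
    """
    rng = np.random.default_rng(seed)
    flat = part_mask.ravel().astype(bool)
    pos_idx = np.flatnonzero(flat)
    neg_idx = np.flatnonzero(~flat)
    # Never request more samples than there are candidate pixels.
    pos = rng.choice(pos_idx, size=min(n_pos, pos_idx.size), replace=False)
    neg = rng.choice(neg_idx, size=min(n_neg, neg_idx.size), replace=False)
    indices = np.concatenate([pos, neg])
    labels = np.concatenate(
        [np.ones(pos.size, dtype=int), np.zeros(neg.size, dtype=int)]
    )
    return indices, labels

# Toy 6x6 image whose "part" covers rows 1-2 and columns 2-4.
mask = np.zeros((6, 6), dtype=bool)
mask[1:3, 2:5] = True
indices, labels = sample_training_pixels(mask, n_pos=4, n_neg=10)
```

The returned indices can be used to pick rows out of a per-pixel feature matrix of shape (H * W, channels).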

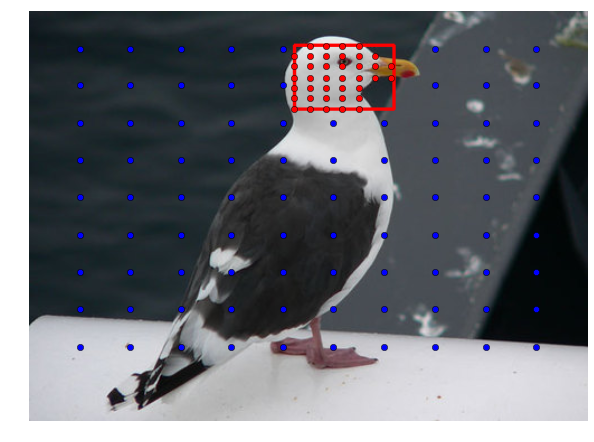

Points for head localization

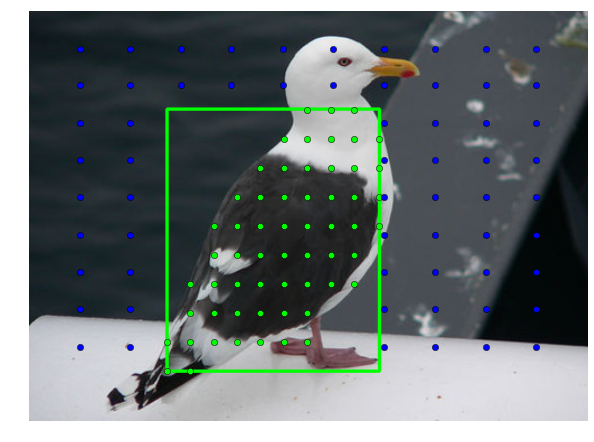

Points for body localization

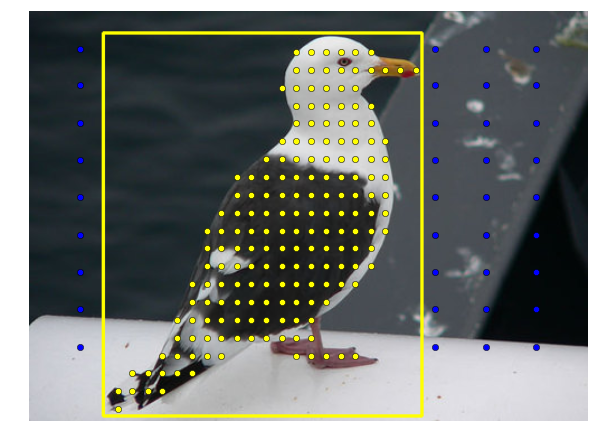

Points for bounding box localization

3.1.3. Pixel Classifier

For classifying pixels we needed a fast probabilistic classifier, so we chose a Random Forest.
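As a rough illustration of this step, the sketch below trains a Random Forest with scikit-learn and reads out per-pixel probabilities. The page does not say which implementation or hyperparameters were used, so the library choice, the helper names, the number of trees, and the synthetic features are all assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def train_pixel_classifier(features, labels, n_trees=50, seed=0):
    """Fit a Random Forest on per-pixel descriptors (n_trees is an assumption)."""
    clf = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    clf.fit(features, labels)
    return clf

def part_probability(clf, features):
    """Return the estimated probability that each pixel belongs to the part."""
    part_column = list(clf.classes_).index(1)  # column for class label 1
    return clf.predict_proba(features)[:, part_column]

# Synthetic stand-in descriptors: two well-separated clusters.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=2.0, size=(200, 8)),   # "part" pixels
    rng.normal(loc=-2.0, size=(200, 8)),  # "background" pixels
])
y = np.concatenate([np.ones(200, dtype=int), np.zeros(200, dtype=int)])

clf = train_pixel_classifier(X, y)
probs = part_probability(clf, X)
```

Reshaping `probs` to the image height and width would give a probability map like the ones shown on this page.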

3.1.4. Results

Below is a set of results from our pixel classifier trained to localize the heads of birds.

Success

Failure

3.1.5. Properties

Our pixel classifier has several interesting properties:

- Ability to localize multiple part instances without any computational overhead.

- Ability to localize parts in pencil line drawings and cartoons.

- Ability to localize parts on novel classes that the model has not seen in the training set. This is expected, since the problem is fine-grained recognition and all classes are sub-classes of one general class (e.g. bird).

- Ability to detect the absence of a part by assigning low probabilities.

3.2. Estimating the bounding box around each part

From the per-pixel classification scores we can easily extract the bounding box around each part:

- Threshold the pixel probability map

- Do some morphological filtering to remove the noise

- Find the largest connected component

- Find the box around that connected component
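The four steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the threshold of 0.5, the 3x3 opening used as the morphological filter, 4-connectivity, and all function names are choices made for this example.

```python
from collections import deque
import numpy as np

def _neighbourhood_views(mask):
    """Return the 9 shifted copies of mask covering each pixel's 3x3 window."""
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)  # outside counts as background
    return [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]

def binary_open_3x3(mask):
    """Erosion followed by dilation with a 3x3 square; removes small specks."""
    eroded = np.logical_and.reduce(_neighbourhood_views(mask))
    return np.logical_or.reduce(_neighbourhood_views(eroded))

def largest_component(mask):
    """Return the (y, x) pixels of the largest 4-connected component."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        component, queue = [], deque([(sy, sx)])
        while queue:  # breadth-first flood fill
            y, x = queue.popleft()
            component.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(component) > len(best):
            best = component
    return best

def part_box(prob_map, threshold=0.5):
    """Return (x_min, y_min, x_max, y_max), or None if no part is found."""
    mask = binary_open_3x3(prob_map >= threshold)
    component = largest_component(mask)
    if not component:
        return None
    ys, xs = zip(*component)
    return int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys))

# Toy probability map: one confident blob plus a single noisy pixel.
prob_map = np.zeros((10, 10))
prob_map[2:6, 3:8] = 0.9
prob_map[8, 1] = 0.9
box = part_box(prob_map)
```

Returning `None` when nothing survives the threshold and filtering gives a simple way to report that a part is absent.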

3.2.1. Results

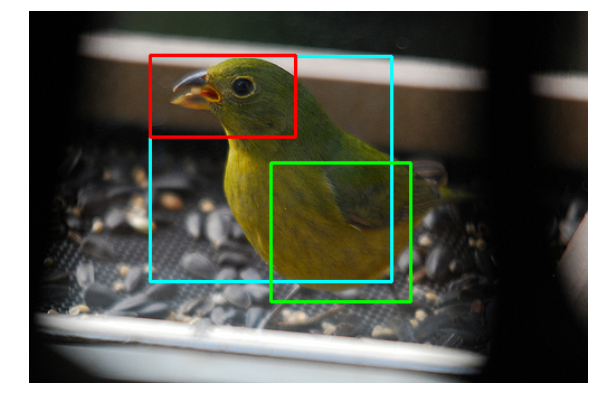

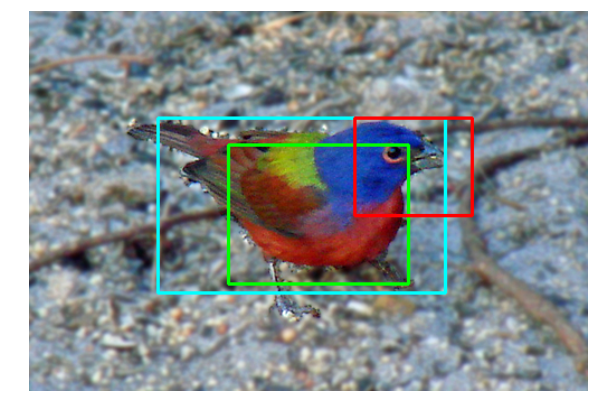

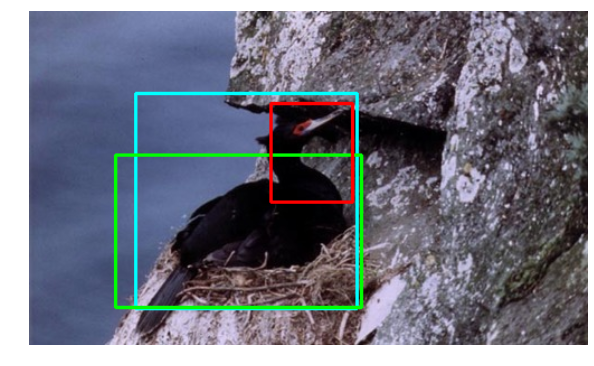

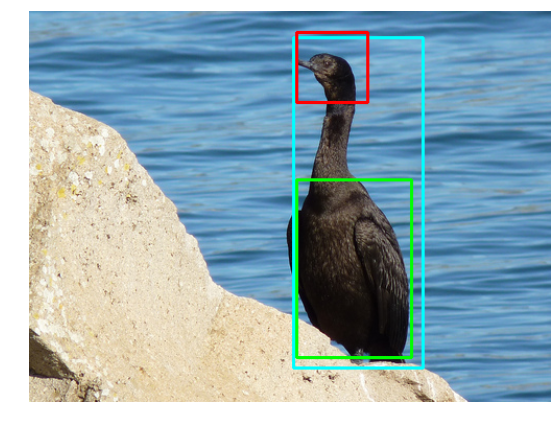

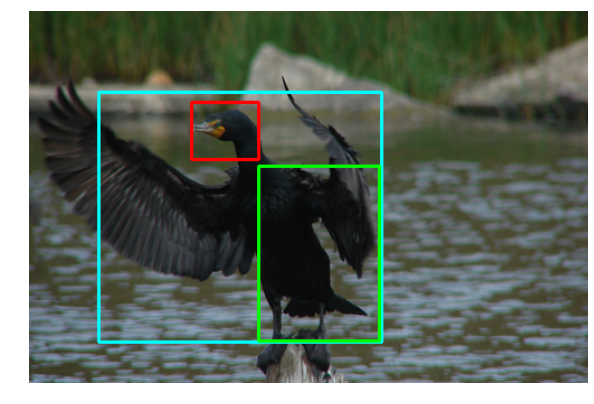

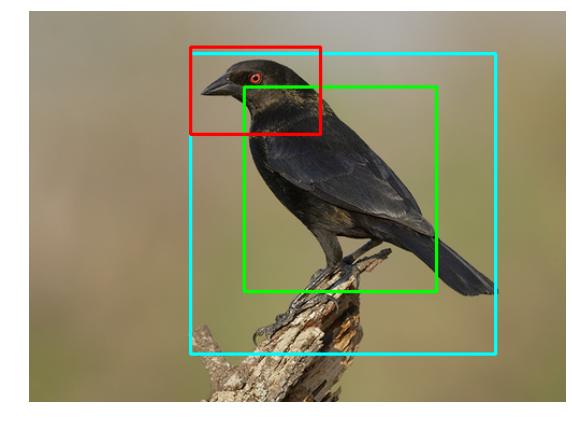

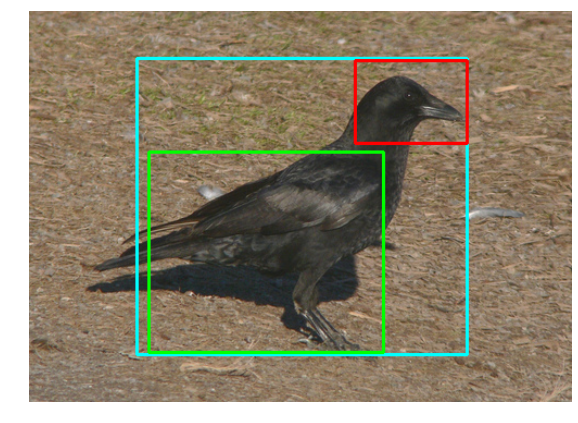

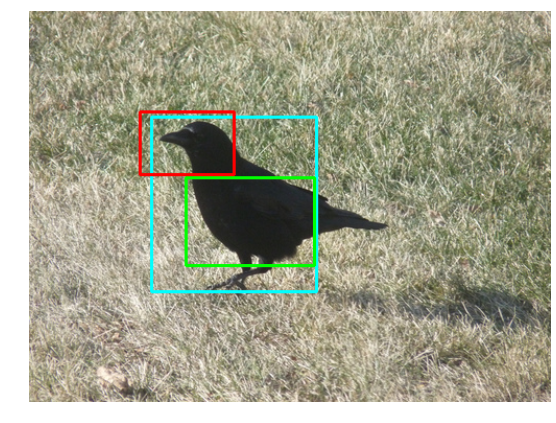

Below is a set of results from our part localizer trained to localize the heads of birds.

Below are some images on which the head, body and bounding box are localized using our system.

Below you can see the results of our system applied to consecutive frames of a video.

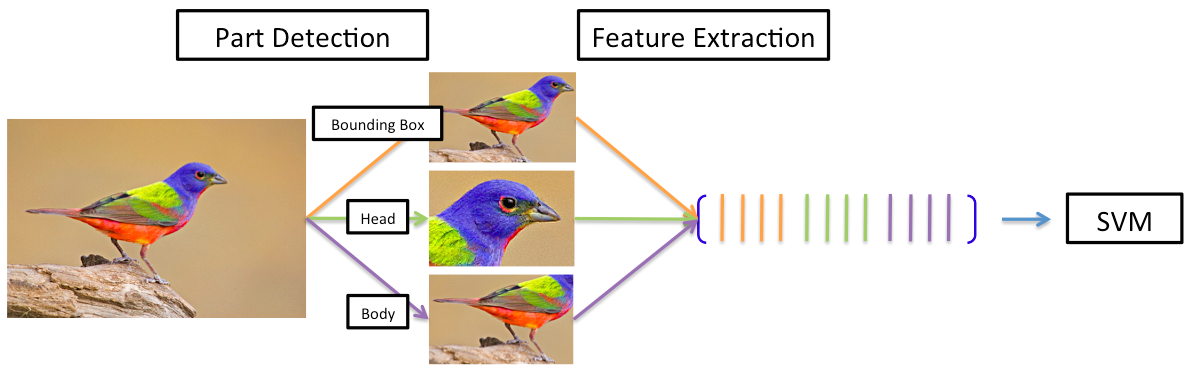

4. Fine-grained categorization

After localizing the parts, we use an approach very similar to [1] for categorization. As categorization features we combine features from the head, the body and the bounding box of the bird. We use a fine-tuned CaffeNet network and take the fc7 activations as features (same as [1]). Below you see an overview of the method.

4.1. Categorization accuracy **

| Method | Training annotations | Testing annotations | Mean accuracy |
|---|---|---|---|
| [1] | bounding box + parts | - | 73.89% |
| Ours | bounding box + parts | - | 72.02% (±0.33) |

5. References and Notes

* Some facts might be outdated, since a few months have passed since this work was published.

** As other works on the same dataset have indicated, using a better network such as VGG-19 can dramatically improve performance. Using VGG-19 we can improve the mean accuracy to 82%. Unfortunately, this work cannot be extended to datasets where part location annotations are not available. This is the main limitation of this work.

[1] Zhang, Ning, et al. "Part-based R-CNNs for fine-grained category detection." ECCV 2014.

[2] Girshick, Ross, et al. "Rich feature hierarchies for accurate object detection and semantic segmentation." CVPR 2014.

[3] Hariharan, Bharath, et al. "Hypercolumns for object segmentation and fine-grained localization." CVPR 2015.